“Any noise annoys an oyster, but a noisy noise annoys an oyster most.”

– Tongue twister, author unknown

As the Python programming language continues to grow in popularity, so too does the accumulation of issues and pull requests (“PRs”) on the CPython GitHub repository. At time of writing (morning of 7th May, 2022), the total stands at 7,027 open issues, and 1,471 pull requests. At the 2022 Python Language Summit, CPython Core Developer Irit Katriel gave a talk on possible ways forward for dealing with the backlog.

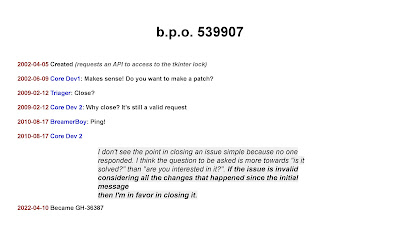

Historically, there has been reluctance among CPython’s team of core developers to close issues or PRs that may be of dubious worth. BPO-539907 was presented to the audience as an issue that had remained open on the issue tracker for over 20 years. The example is an extreme one, but represents a pattern that anybody who has scrolled through the CPython issue tracker will surely have seen before:

Anyone with experience in triaging issue trackers in open source will know that it is not always easy to close an issue. People on the internet do not always take kindly to being told that something they believe to be a bug is, in fact, intended behaviour.

Low-quality feature requests can be even harder to tackle, and can be broadly split into three buckets. The first bucket holds feature requests that simply make no sense, or else would have actively harmful impacts – these can be fairly easily closed. The second bucket holds feature requests that would add maintenance costs, but would realistically add little value to end users. These can often lead to tiresome back-and-forths – something one person may see as a large problem in a piece of software may, ultimately, cause few problems for the majority of users.

The feature requests that can linger on an issue tracker for twenty years, however, are usually those in the third bucket: features that everybody can agree might be nice if, in an ideal world, they were implemented – but that nobody, ultimately, has the time or motivation to work on.

Katriel’s contention is that leaving an issue open on the tracker for 20 years serves no one and that, instead, we should think harder about what an issue tracker is actually for.

If the proposed tkinter lock from BPO-539907 is ever implemented, Katriel argues, “it’s not because of the twenty-year-old issue – it’s because somebody will discover the need for it.” Rather than only closing issues that have obvious defects, we should flip the script, and become far more willing to close issues if they serve no obvious purpose. Issues should only be kept open if they serve an obvious purpose in helping further CPython’s development. Instead of asking “Why should we close this?”, we should instead ask, “Why should we keep this open?”

Citing a recent blog post by Sam Schillace, Katriel argues that not only do issues such as BPO-539907 (newly renamed as GH-36387, for those keeping tabs) serve little purpose – they also do active harm to the CPython project. Schillace argues that the problem of the “noisy monitor” – a term he uses for any kind of feedback system where it becomes impossible to tell the signal from the noise – is “one of the most pernicious, and common, patterns that engineering teams fall prey to”. Leaving low-quality issues on a tracker, Schillace argues, wastes the time of developers and triagers, and “obscures both newer quality issues as well as the overall drift of the product.”

“It’s far better… to keep the tool clean for the things that matter.”

– Sam Schillace, Noisy Monitors

No one has done more work than Katriel over the past few years to keep the issue tracker healthy, and her presentation was well received by the audience of core devs and triagers. The question of where now to proceed, however, is harder to tackle.

Pablo Galindo Salgado, an expert on CPython’s PEG parser, and the chief architect behind the “Better error messages” project in recent years, noted that he received “many, many issues” relating to possible changes to the parser and improvements to error messages. “Every time you close an issue,” he said, “People demand an explanation.” Arguing that maintainer time is “the most valuable resource” in open-source software, Salgado said that the easiest option was often just to leave it open.

However, hard though it may be to close an issue, ignoring open issues for an extended period of time also does a disservice to contributors. Itamar Ostricher – not a CPython core developer, but an experienced software engineer who has worked for many years at Meta – said that the contributor experience was “often confusing”. “Is this an issue where a PR would be accepted if I wrote one? Does a core dev want to work on it?” Ostricher asked. Or is it just a bad idea?

Ned Deily, release manager for Python 3.6 and 3.7, agreed, and argued that CPython needed to become more consistent in how core devs treat issues and PRs. Some modules, like tkinter, have been “ownerless” for a long time, Deily argued. This can create a chicken-and-egg problem. If a module has no maintainer, the solution is to add more maintainers. But a contributor can only become a maintainer if they demonstrate their worth through a series of merged PRs. And if a module has no active maintainer, there may be no core devs who feel they have sufficient expertise to review and merge a PR relating to the unmaintained module. So the contributor can never become a core developer (as their PRs will never be merged), and the module will never gain a new maintainer.

Where now?

Various solutions were proposed to improve the situation. Katriel thought it would be good to introduce a new “Accepted” label, that could be added by a triager or a core developer. The idea is that the presence of the label signifies that the core developer team is not waiting for any further information from the issue filer: the bug report (or feature request) has been acknowledged as valid.

Many attendees noted that the problem was in many ways a social problem rather than a technical problem: the core development team needed a fundamental change in mindset if they were to seriously tackle the issue backlog. Senthil Kumaran argued that we should “err on the side of closing things”. Jelle Zijlstra similarly argued that we needed to reach a place where it was understood to be “okay” to close a feature request that had been open for many years with no activity.

There was also, however, interest in improving workflow automation. Christian Heimes discussed the difficulty of closing an issue or PR if you are a core developer with English as a second language. Crafting the nuances of a rejection notice so that it is polite but also clear can be a challenging task. Ideas around automated messages from bots or canned responses were discussed.

The enormity of the task at hand is clear. Unfortunately, there is probably not one easy fix that will solve the problem.

Things are already moving in a better direction, however, in many respects. Łukasz Langa, CPython’s Developer-In-Residence, has been having a huge impact in stabilising the number of open issues. The CPython triage team, a group of volunteers helping the core developers maintain CPython, has also been significantly expanded in recent months, increasing the workforce available to triage and close issues and PRs.

PEP 594, deprecating several standard-library modules that have been effectively unmaintained for many years, also led to a large number of issues and PRs being closed in recent months. And the transition to GitHub issues itself, which only took place a few weeks ago, appears to have imbued the triage team with a new sense of energy.

Discussion continues on Discourse about further potential ways forward:

These were a series of short talks, each lasting around five minutes.

Read the rest of the 2022 Python Language Summit coverage here.

Lazy imports, with Carl Meyer

Carl Meyer, an engineer at Instagram, presented on a proposal that has since blossomed into PEP 690: lazy imports, a feature that has already been implemented in Cinder, Instagram’s performance-optimised fork of CPython 3.8.

What’s a lazy import? Meyer explained that the core difference with lazy imports is that the import does not happen until the imported object is referenced.

Examples

Consider a Python module, `spam.py`, that imports a module `eggs` but never references `eggs` after the import. With lazy imports activated, `eggs` would never in fact be imported.

Now consider a second module, `ham.py`, that imports a function `bacon_function` but only uses it right at the end of the script, after a for-loop that takes a very long time to finish. With lazy imports activated, `bacon_function` is imported – but only at that final point, once the loop has completed.

Meyer revealed that the Instagram team’s work on lazy imports had resulted in startup time improvements of up to 70%, memory usage improvements of up to 40%, and the elimination of almost all import cycles within their code base. (This last point will be music to the ears of anybody who has worked on a Python project larger than a few modules.)
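The deferred behaviour described above can be approximated today with the standard library’s `importlib.util.LazyLoader`. The sketch below is only an illustration of the general idea: it is not the PEP 690 mechanism, nor Cinder’s implementation, and the helper `lazy_import`, the module `eggs_demo` and the environment variable `EGGS_DEMO_IMPORTED` are hypothetical names invented for the demo.

```python
import importlib
import importlib.util
import os
import sys
import tempfile


def lazy_import(name):
    """Return a module whose body only runs on first attribute access."""
    spec = importlib.util.find_spec(name)
    loader = importlib.util.LazyLoader(spec.loader)
    spec.loader = loader
    module = importlib.util.module_from_spec(spec)
    sys.modules[name] = module
    loader.exec_module(module)  # arms the lazy module; the body has not run yet
    return module


# Hypothetical demo module: executing its body leaves a visible side effect.
demo_dir = tempfile.mkdtemp()
with open(os.path.join(demo_dir, "eggs_demo.py"), "w") as f:
    f.write(
        "import os\n"
        "os.environ['EGGS_DEMO_IMPORTED'] = '1'\n"
        "def eggs_function():\n"
        "    return 'eggs'\n"
    )
sys.path.insert(0, demo_dir)
importlib.invalidate_caches()
os.environ.pop("EGGS_DEMO_IMPORTED", None)

eggs_demo = lazy_import("eggs_demo")
# Nothing has referenced the module yet, so its body has not been executed.
imported_before_use = "EGGS_DEMO_IMPORTED" in os.environ

# The first attribute access is what actually triggers the import.
result = eggs_demo.eggs_function()
imported_after_use = "EGGS_DEMO_IMPORTED" in os.environ

print(imported_before_use, imported_after_use, result)
```

If `eggs_demo` were never referenced, as in the `spam.py` scenario, its body would simply never run.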

Downsides

Meyer also laid out a number of costs to having lazy imports. Lazy imports create the risk that `ImportError` (or any other error resulting from an unsuccessful import) could potentially be raised… anywhere. Import side effects could also become “even less predictable than they already weren’t”.

Lastly, Meyer noted, “If you’re not careful, your code might implicitly start to require it”. In other words, you might unexpectedly reach a stage where – because your code has been using lazy imports – it now no longer runs without the feature enabled, because your code base has become a huge, tangled mess of cyclic imports.
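The first risk Meyer described, an import error surfacing far from the import statement, can be reproduced with the standard library’s `importlib.util.LazyLoader`. Again, this is a rough sketch rather than the PEP 690 behaviour itself; `lazy_import`, `broken_demo` and `module_that_does_not_exist_demo` are hypothetical names used only for illustration.

```python
import importlib
import importlib.util
import os
import sys
import tempfile


def lazy_import(name):
    """Return a module whose body only runs on first attribute access."""
    spec = importlib.util.find_spec(name)
    loader = importlib.util.LazyLoader(spec.loader)
    spec.loader = loader
    module = importlib.util.module_from_spec(spec)
    sys.modules[name] = module
    loader.exec_module(module)  # arms the lazy module; the body has not run yet
    return module


# Hypothetical module whose body fails because a dependency is missing.
demo_dir = tempfile.mkdtemp()
with open(os.path.join(demo_dir, "broken_demo.py"), "w") as f:
    f.write("import module_that_does_not_exist_demo\n")
sys.path.insert(0, demo_dir)
importlib.invalidate_caches()

# The "import" itself succeeds silently...
broken_demo = lazy_import("broken_demo")
raised_at_import = False

# ...and the failure only appears wherever the module is first touched.
raised_at_first_use = False
try:
    broken_demo.anything
except ImportError:
    raised_at_first_use = True

print(raised_at_import, raised_at_first_use)
```

In a large code base, that first touch could sit in any function on any code path, which is what makes the error hard to anticipate.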

Where next for lazy imports?

Python users who have opinions either for or against the proposal are encouraged to join the discussion on discuss.python.org.

Python-Dev versus Discourse, with Thomas Wouters

This was less of a talk, and more of an announcement.

Historically, if somebody wanted to make a significant change to CPython, they were required to post on the python-dev mailing list. The Steering Council now views the alternative venue for discussion, discuss.python.org, as a superior forum in many respects.

Thomas Wouters, Core Developer and Steering Council member, said that the Steering Council was planning on loosening the requirements, stated in several places, that emails had to be sent to python-dev in order to make certain changes. Instead, they were hoping that discuss.python.org would become the authoritative discussion forum in the years to come.

Asks from Pyston, with Kevin Modzelewski

Kevin Modzelewski, core developer of the Pyston project, gave a short presentation on ways forward for CPython optimisations. Pyston is a performance-oriented fork of CPython 3.8.12.

Modzelewski argued that CPython needed better benchmarks; the existing benchmarks on pyperformance were “not great”. Modzelewski also warned that his “unsubstantiated hunch” was that the Faster CPython team had already accomplished “greater than one-half” of the optimisations that could be achieved within the current constraints. Modzelewski encouraged the attendees to consider future optimisations that might cause backwards-incompatible behaviour changes.

Core Development and the PSF, with Thomas Wouters

This was another short announcement from Thomas Wouters on behalf of the Steering Council. After sponsorship from Google providing funding for the first ever CPython Developer-In-Residence (Łukasz Langa), Meta has provided sponsorship for a second year. The Steering Council also now has sufficient funds to hire a second Developer-In-Residence – and attendees were notified that the council was open to the idea of hiring somebody who was not currently a core developer.

“Forward classes”, with Larry Hastings

Larry Hastings, CPython core developer, gave a brief presentation on a proposal he had sent round to the python-dev mailing list in recent days: a “forward class” declaration that would avoid all issues with two competing `typing` PEPs: PEP 563 and PEP 649. In brief, the proposed syntax would look something like this:

In theory, according to Hastings, this syntax could avoid issues around runtime evaluation of annotations that have plagued PEP 563, while also circumventing many of the edge cases that unexpectedly fail in a world where PEP 649 is implemented.

The idea was in its early stages, and reaction to the proposal was mixed. The next day, at the Typing Summit, there was more enthusiasm voiced for a plan laid out by Carl Meyer for a tweaked version of Hastings’s earlier attempt at solving this problem: PEP 649.

Better fields access, with Samuel Colvin

Samuel Colvin, maintainer of the Pydantic library, gave a short presentation on a proposal (recently discussed on discuss.python.org) to reduce name clashes between field names in a subclass, and method names in a base class.

The problem is simple. Suppose you’re a maintainer of a library, `whatever_library`. You release Version 1 of your library, and a user starts to use it to make classes that inherit from the library’s `BaseModel` and have an instance attribute named `fields`.

Both the user and the maintainer are happy, until the maintainer releases Version 2 of the library. Version 2 adds a method, `.fields()`, to `BaseModel`, which will print out all the field names of a subclass. But this creates a name clash with your user’s existing code, which has `fields` as the name of an instance attribute rather than a method.

Colvin briefly sketched out an idea for a new way of looking up names that would make it unambiguous whether the name being accessed was a method or attribute.
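The clash can be reproduced in a few lines of plain Python. This is a minimal sketch under stated assumptions: `BaseModelV1` and `BaseModelV2` are hypothetical stand-ins for the two library versions (modelled here with inheritance purely for brevity), `Farm` is an invented user class, and none of this reflects Pydantic’s actual implementation.

```python
class BaseModelV1:
    """Stand-in for BaseModel in Version 1 of the hypothetical library."""

    def __init__(self, **data):
        # Store every keyword argument as an instance attribute (a "field").
        for name, value in data.items():
            setattr(self, name, value)


class BaseModelV2(BaseModelV1):
    """Stand-in for Version 2, which adds a .fields() method."""

    def fields(self):
        # Return the field names (the article's version prints them).
        return list(vars(self))


class Farm(BaseModelV2):
    """User code written against Version 1, now running on Version 2."""


# A user whose data happens to include a field called `fields`.
farm = Farm(owner="Giles", fields=["north", "south"])

# The instance attribute shadows the new method, so calling it fails.
try:
    farm.fields()
    clash_message = None
except TypeError as exc:
    clash_message = str(exc)

# A user without the conflicting field sees the method work as intended.
plain = Farm(owner="Giles")
field_names = plain.fields()

print(clash_message)
print(field_names)
```

Because attribute lookup checks the instance before the class for ordinary methods, the user’s data silently wins, and the library’s new method becomes unreachable through normal attribute access on that instance.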